Encapsulated Neural Network Libraries

There are many great open-source libraries for neural networks and deep learning. Some of them try to wrap every function they provide in a uniform interface or protocol (so-called define-and-run, e.g. Caffe and the TensorFlow frontend). Such well-encapsulated libraries may be easy to use, but they are difficult to change. With the rapid development of deep learning, people in the field commonly need to experiment with new ideas that go beyond those encapsulations, and I often find that the very interface or protocol I need is just the programming language itself.

PyTorch does a great job here: it doesn't make unnecessary assumptions (e.g. it is not too late to infer the input shape of a layer at run time), and it is convenient to extend at the code level. Before I found PyTorch, I wrote a library myself with the aim of going from idea to result with the least possible delay. What follows are some implementation notes.

Train and Test, Models and Parameters

Is the same network used for both training and test? In the last century, the answer would probably have been yes. Today it is often no. For instance, recently developed layers such as batch normalization behave differently at training and test time, and sometimes people only need an intermediate result as a feature representation for other tasks at test time. Here are a few examples of architectures that differ between training and test: Deep Learning Face Attributes in the Wild, FractalNet: Ultra-Deep Neural Networks without Residuals, and Auto-Encoding Variational Bayes.
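To make the batch normalization case concrete, here is a minimal pure-Python sketch for a batch of scalar activations. The class name `BatchNorm1D` and its `training` flag are illustrative assumptions, not any library's API. In training mode the layer normalizes with the statistics of the current batch and updates running averages; in test mode it normalizes with those running averages instead, so the same batch produces different outputs in the two modes. The momentum-style running average is one common choice; libraries differ in details such as biased vs. unbiased variance.

```python
import math


class BatchNorm1D:
    """Hypothetical batch norm over a batch of scalars (illustration only)."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.gamma, self.beta = 1.0, 0.0  # learnable scale and shift
        self.running_mean, self.running_var = 0.0, 1.0
        self.momentum, self.eps = momentum, eps

    def forward(self, xs, training):
        if training:
            # training: normalize with the statistics of the current batch
            mean = sum(xs) / len(xs)
            var = sum((x - mean) ** 2 for x in xs) / len(xs)
            # ...and update the running estimates that test mode will use
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            # test: ignore the batch statistics, use the accumulated running ones
            mean, var = self.running_mean, self.running_var
        return [self.gamma * (x - mean) / math.sqrt(var + self.eps) + self.beta for x in xs]


bn = BatchNorm1D()
batch = [1.0, 2.0, 3.0]
out_train = bn.forward(batch, training=True)
out_test = bn.forward(batch, training=False)
print(out_train, out_test)
```

After a single training step the running statistics are still far from the batch statistics, which is why the two outputs differ noticeably here.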

So the architectures can differ and the computations can differ; the only thing that connects training and test is (a subset of) the parameters. In terms of code, we should therefore separate the training and test networks into two different models: the training model specifies how to search for the parameters, and the test model specifies how to use them. In practice I have found this decoupling of training from test, and of models from parameters, useful for other machine learning applications as well.

Implementing a Neural Network Library

The neural network library I wrote is built around the following key points; hopefully they help sort out the related concepts from an implementation standpoint.

- The notion of a network breaks down into two types of functions: one optimizes an objective function w.r.t. some parameters through an optimizer (training); the other computes the output for some input given the parameters (test).

- Functions are defined by stacking up layers.

- A layer can have its own parameters, input and output, and it may behave differently for training, test or other modes (e.g. whether the gradient is disconnected or not).

- Parameters are defined independently and can be shared between layers and functions.

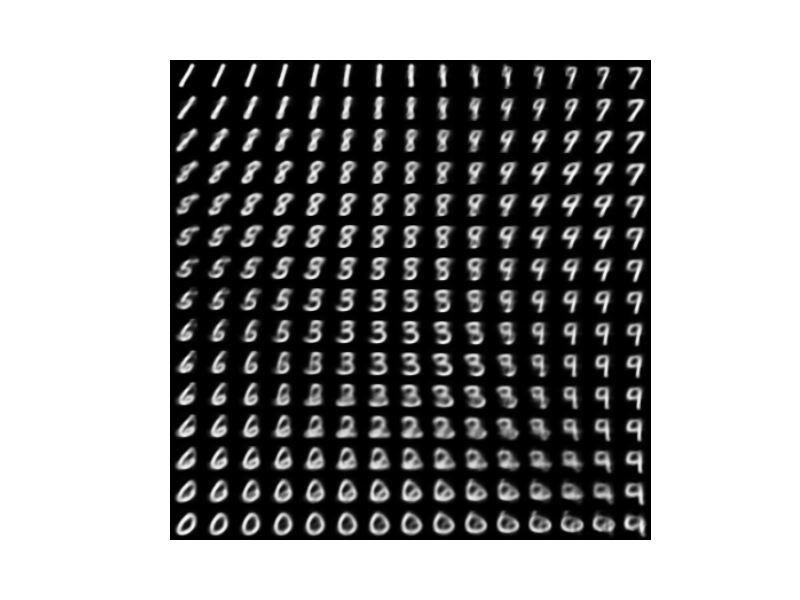
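The key points above can be sketched in a few dozen lines of pure Python. This is not the library described here; it is a minimal illustration under simplifying assumptions: every value is a scalar, gradients are back-propagated by hand through each layer's `backward`, and `Parameter`, `Linear`, `Dropout`, `Function` and `sgd_step` are hypothetical names. Parameters are created on their own and passed into layers, a `Function` is a stack of layers evaluated in a fixed mode, `Dropout` behaves differently in training and test mode, and the training and test functions share the same layers and therefore the same parameters.

```python
import random


class Parameter:
    """A value defined independently of any layer, so layers and functions can share it."""

    def __init__(self, value):
        self.value = value
        self.grad = 0.0


class Linear:
    """Scalar layer y = w * x + b, holding references to (possibly shared) Parameters."""

    def __init__(self, w, b):
        self.w, self.b = w, b

    def forward(self, x, mode):
        self.x = x  # cache the input for the backward pass
        return self.w.value * x + self.b.value

    def backward(self, grad_out):
        # accumulate parameter gradients, return the gradient w.r.t. the input
        self.w.grad += grad_out * self.x
        self.b.grad += grad_out
        return grad_out * self.w.value


class Dropout:
    """Mode-dependent layer: random masking in "train" mode, identity otherwise."""

    def __init__(self, p, rng):
        self.p, self.rng = p, rng

    def forward(self, x, mode):
        if mode == "train":
            # inverted dropout: drop with probability p, rescale survivors by 1 / (1 - p)
            self.mask = 0.0 if self.rng.random() < self.p else 1.0 / (1.0 - self.p)
        else:
            self.mask = 1.0
        return x * self.mask

    def backward(self, grad_out):
        return grad_out * self.mask


class Function:
    """A function is a stack of layers evaluated in order under a fixed mode."""

    def __init__(self, layers, mode):
        self.layers, self.mode = layers, mode

    def __call__(self, x):
        for layer in self.layers:
            x = layer.forward(x, self.mode)
        return x

    def backward(self, grad_out):
        for layer in reversed(self.layers):
            grad_out = layer.backward(grad_out)


def sgd_step(params, lr):
    """Optimizer: plain gradient descent on a list of Parameters, then reset gradients."""
    for p in params:
        p.value -= lr * p.grad
        p.grad = 0.0


# Parameters first, then layers that use them, then two functions sharing those layers.
w, b = Parameter(0.0), Parameter(0.0)
drop, linear = Dropout(0.5, random.Random(0)), Linear(w, b)
train_fn = Function([drop, linear], mode="train")
test_fn = Function([drop, linear], mode="test")

# Training function: minimize the squared error through the optimizer.
data = [(1.0, 3.0), (2.0, 5.0)]
for _ in range(50):
    for x, y in data:
        pred = train_fn(x)
        train_fn.backward(2.0 * (pred - y))  # d/dpred of (pred - y) ** 2
        sgd_step([w, b], lr=0.01)

# Test function: same parameters, deterministic output.
print(test_fn(2.0))
```

Because the test function holds references to the same `Parameter` objects, it sees the trained values without any copying, and a different test-time architecture could reuse just a subset of them.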

Variational Autoencoder Example

Here are some samples generated by a variational autoencoder implemented with my own library.
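To connect this example to the train/test split discussed earlier, here is a toy pure-Python sketch of how the two VAE models differ; it is not the implementation behind these samples. It assumes a one-dimensional data point, a linear encoder mean with a constant log-variance, a linear decoder, hypothetical parameter names, and squared error as a stand-in for the reconstruction log-likelihood. The training model encodes, samples z with the reparameterization trick and adds the closed-form KL divergence to the standard normal prior; the generation model used for samples like these skips the encoder entirely and decodes z drawn from the prior.

```python
import math
import random

# Hypothetical scalar parameters standing in for encoder/decoder weights.
params = {"enc_mu": 0.8, "enc_logvar": -1.0, "dec_w": 1.2, "dec_b": 0.1}


def decode(params, z):
    """Decoder shared by training and generation."""
    return params["dec_w"] * z + params["dec_b"]


def vae_training_loss(params, x, rng):
    """Training model: encode, reparameterize, decode, and score one data point."""
    mu = params["enc_mu"] * x
    log_var = params["enc_logvar"]
    eps = rng.gauss(0.0, 1.0)
    # reparameterization trick: z ~ N(mu, exp(log_var)) as a deterministic function of eps
    z = mu + math.exp(0.5 * log_var) * eps
    recon = (decode(params, z) - x) ** 2  # squared error stands in for -log p(x | z)
    # closed-form KL(N(mu, exp(log_var)) || N(0, 1))
    kl = -0.5 * (1.0 + log_var - mu ** 2 - math.exp(log_var))
    return recon + kl


def vae_generate(params, rng):
    """Generation (test) model: no encoder; draw z from the prior and decode it."""
    z = rng.gauss(0.0, 1.0)
    return decode(params, z)


rng = random.Random(0)
loss = vae_training_loss(params, 1.0, rng)
samples = [vae_generate(params, rng) for _ in range(5)]
print(loss, samples)
```

Only the decoder parameters connect the two models, which is the "(a subset of) the parameters" situation described above.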